It wouldn’t be wrong to call Steve Engels a musician. After all, he spent eight years of his childhood studying piano, then graduated to guitar, dabbling along the way with the clarinet and drums. “I sampled a lot from the buffet,” he says.

But Engels’ music teachers could hardly have foreseen where all his training would lead. Today, the U of T computer science professor is not so much a musician as a musical facilitator: someone who gets machines to compose and play music in ways that he, a mere mortal, cannot.

Some might say he’s entered uncharted territory. While it’s generally accepted that artificial intelligence excels in many respects – it can translate text between dozens of languages and beat us at games such as chess and Jeopardy – it can’t possibly summon the imagination necessary to create art. Or can it?

Engels thinks it can. “Artificial intelligence has the same capacity for creativity that humans have,” he says. To teach a computer to make music, he feeds it many samples of existing compositions, which the computer studies for patterns. It can then generate new music in the same style. While the resulting tune may not be completely original, Engels argues that humans, who have also been known to produce a derivative work or two, follow a similar process – whether we’re conscious of it or not. “When it comes to creativity, are any of us completely removed from other inspirations?”

Engels got his start in computer-generated music several years ago by creating rudimentary software (based on a simple machine-learning technique) that could scramble and spew out a series of new patterns inspired by a single piece of music. The initial composition, he admits, was ugly. With more work on the program, the music got slightly better: “It sounded like a distracted jazz pianist.”

Since then, he’s been able to integrate more than one musical source into his program and incorporate increasingly complex chord progressions. The three base elements he works with are the timing, pitch and duration of chords or notes. What the program does is recombine the chords or notes in novel ways. Under the guidance of one of his students, a computer in the department recently composed four straight hours of “new” Bach music, using the great composer’s preferred musical structures.
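The article doesn’t say which “simple machine-learning technique” Engels’ software uses. One common, easy-to-follow way to learn patterns from source tunes and recombine them is a first-order Markov chain, sketched below under that assumption. Each note is represented (a hypothetical choice) as a pitch, given as a MIDI note number, plus a duration in beats; a note’s timing is its start point, computed as the running total of the durations before it. The model records which notes follow which across several source tunes, then walks those transitions at random to produce a new sequence. The function names and the two short melodies are illustrative only.

```python
from __future__ import annotations

import random
from collections import defaultdict

# Hypothetical note representation: (MIDI pitch number, duration in beats).
Note = tuple[int, float]


def train(sources: list[list[Note]]) -> dict[Note, list[Note]]:
    """Record, for every note, each note that immediately follows it in any source tune."""
    transitions: defaultdict[Note, list[Note]] = defaultdict(list)
    for tune in sources:
        for current, following in zip(tune, tune[1:]):
            transitions[current].append(following)
    return dict(transitions)


def generate(transitions: dict[Note, list[Note]], start: Note,
             length: int, seed: int | None = None) -> list[tuple[float, int, float]]:
    """Walk the chain from `start`, returning (onset, pitch, duration) triples."""
    rng = random.Random(seed)
    note, onset, melody = start, 0.0, []
    for _ in range(length):
        pitch, duration = note
        melody.append((onset, pitch, duration))
        onset += duration  # timing: the next note begins when this one ends
        followers = transitions.get(note)
        if not followers:
            break  # dead end: this note never had a successor in the sources
        # Successors that occurred more often in the sources are more likely to be picked.
        note = rng.choice(followers)
    return melody


# Two toy source melodies (illustrative, not real training data).
tune_a = [(60, 1.0), (62, 1.0), (64, 2.0), (62, 1.0), (60, 1.0)]
tune_b = [(64, 1.0), (62, 1.0), (60, 2.0), (62, 1.0), (64, 1.0)]

model = train([tune_a, tune_b])
melody = generate(model, start=(60, 1.0), length=8, seed=1)
print(melody)
```

Because a transition that occurs several times in the sources appears several times in the successor list, `random.choice` naturally favours the patterns the sources use most. A first-order chain only looks one note back, so its output tends to wander; looking further back in the sequence, or switching to a neural model, is the usual next step.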

Bach is known as a highly mathematical composer, which is why he was chosen for this project – more rational, one might say, than delicate Mozart or passionate Beethoven. I ask Engels if he thinks computer compositions lack emotion. “Ah,” he says with a smile, as if I’ve just stumbled on his missing house keys. “We haven’t programmed that layer in yet.” A machine, he explains, can only work off the quantity and quality of data it’s fed. And so far nobody has figured out a way to program feelings into machines, though Engels thinks it will be possible in time.

Then he asks me to consider something else: when it comes to art, it’s really human response – not machine intention – that matters anyway. “What makes one person smile won’t have that effect on another. Human artists have this problem all the time, right? So that’s where it becomes very difficult to say we’re going to make people cry, or laugh.”

As a form of artistic expression, music has a limited but exceptionally powerful vocabulary. Whether put together by human or computer, a handful of notes can, on their own, evoke an emotional response in the listener. That’s much harder to do with words.

Accordingly, when Los Angeles–based singer and Internet personality Taryn Southern recorded IAMAI, she structured the album (set to be released in May) as a collaborative effort. She wrote the heartfelt human lyrics, while programs with names such as Watson Beat and Amper Music came up with tunes that matched her moods. It’s said to be the first pop album composed and produced by artificial intelligence.

Southern’s music doesn’t sound in any way inhuman. In its predictable chord changes and superficial melancholy, it actually resembles most current pop music. It puts one in mind of a scene that Engels likes to evoke from the Isaac Asimov–inspired movie I, Robot. When Will Smith’s character asks a robot if it can write a symphony, the robot responds: “Can you?” The implicit answer is, well, sure – with enough time and training. But most of us won’t produce anything that’s very good.

Recently, U of T computer science professors Sanja Fidler and Raquel Urtasun, and PhD student Hang Chu, created computer-generated musical compositions with lyrics. Chu and the team trained a neural network on images and their captions, song lyrics and 100 hours of online music to create a program that could analyze a digital photograph and then write a song about it. One piece I heard, based on a Yuletide photo, is musically creepy – and lyrically very off. “I’ve always been there for the rest of our lives,” sings the machine. “A hundred and a half hour ago.” (One Internet commentator noted, though: “It’s still better than Paul McCartney’s ‘Wonderful Christmastime.’”)

Where words are concerned, it may be that AI is just in its “distracted jazz pianist” phase and things will get better. But Adam Hammond, a U of T professor of English literature, doesn’t think so. “I can’t imagine a world in which we turn to artificially generated stories,” he says. “Stories don’t mean anything to computers, so why would stories written by computers mean anything to us?”

Though an expert in the century-old works of British Modernist authors, Hammond champions the use of digital tools to analyze literature. Working with Julian Brooke, a professor at the University of British Columbia who specializes in computational linguistics, he’s developed algorithms that can plumb the depths of existing texts – discovering stylistic properties that humans might not notice but that tell us much about the way humans write.

Last year, he and Brooke used an algorithm to analyze common thematic elements in 50 science fiction stories given to them by author Stephen Marche (MA 1998, PhD 2005). (The stories were written by masters of the form, such as Ursula K. Le Guin and Isaac Asimov.) The computer then supplied Marche with 14 theme-related rules he could use to write the “ultimate” science fiction story. These included a directive to use “extended descriptions of intense physical sensations and name the bodily organs that perceive these sensations.” Marche also received separate style guidelines, such as to use a lot of adverbs.

The process wasn’t AI per se, in the sense that Marche (not the algorithm) did the actual writing. But “it showed how humans can collaborate with algorithms to create new kinds of art,” Hammond says.

Hammond does think a computer could write a story on its own – just not a very good one. “Every night we humans go to sleep and make up stories in dreams,” he says. “There’s some kind of biological imperative to make sense of our lives through storytelling. Computers don’t have that.” Indeed, AI makes us realize that as consumers of art we may be connecting subconsciously with the artist’s need to make it.

But Hammond still thinks AI can spur creativity, or help writers think up themes. Computers are already fairly good copy editors; his experiment shows they can act as substantive editors as well, by supplying rules and detecting irritants such as prejudice or excessive repetition on the writer’s part.
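Hammond’s actual tools aren’t described here in enough detail to reproduce. As a rough illustration of the simplest kind of check that could flag “excessive repetition,” the sketch below counts content words in a passage and reports those used at least a set number of times. The stop-word list, the threshold and the name `overused_words` are hypothetical choices.

```python
import re
from collections import Counter

# A tiny, hypothetical stop-word list; a real tool would use a much larger one.
STOP_WORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "it", "is",
              "was", "that", "he", "she", "i"}


def overused_words(text: str, min_count: int = 3) -> list[tuple[str, int]]:
    """Return content words used at least `min_count` times, most frequent first."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(w for w in words if w not in STOP_WORDS)
    return [(word, n) for word, n in counts.most_common() if n >= min_count]


draft = ("The ship drifted. The ship was silent. Somewhere in the silent ship, "
         "a silent alarm blinked silently.")
print(overused_words(draft))
```

It does no stemming, so “silent” and “silently” count as different words; a practical editing aid would likely normalize word forms and compare frequencies against a reference corpus rather than rely on a fixed cut-off.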

Neither Hammond nor Engels believes that AI will affect artists’ jobs in the way it has already eliminated other kinds of work. Engels says his software was designed to “help people who wished to hire composers, but couldn’t. We never wanted to take anything away; we wanted to give something to people who couldn’t have it otherwise.”

Still, he looks at programs such as Google’s DeepDream (which generates new images using a neural network trained on large collections of existing pictures) and wonders at the possibilities. “There are a lot of people who can’t hire artists who now go to Google Images and pull down things from the Creative Commons,” says Engels. “Wouldn’t it be great if you needed an original piece of art, and you didn’t want to download what everybody else was downloading? You could provide the software with a sample of what you’re looking for, and get an unending stream of music in that style.”

Downloading art, as opposed to writing, painting or singing it, may seem depressingly mechanistic to some. But the only real question is: Does your human mind appreciate it? If so, there isn’t much arguing with that.

Can you tell the difference between music composed by Johann Sebastian Bach and music composed by an AI algorithm trained on Bach’s music? Here are one-minute samples of each:

A

B


The real Bach is A.

Recent Posts

U of T’s Feminist Sports Club Is Here to Bend the Rules

The group invites non-athletes to try their hand at games like dodgeball and basketball in a fun – and distinctly supportive – atmosphere

From Mental Health Studies to Michelin Guide

U of T Scarborough alum Ambica Jain’s unexpected path to restaurant success

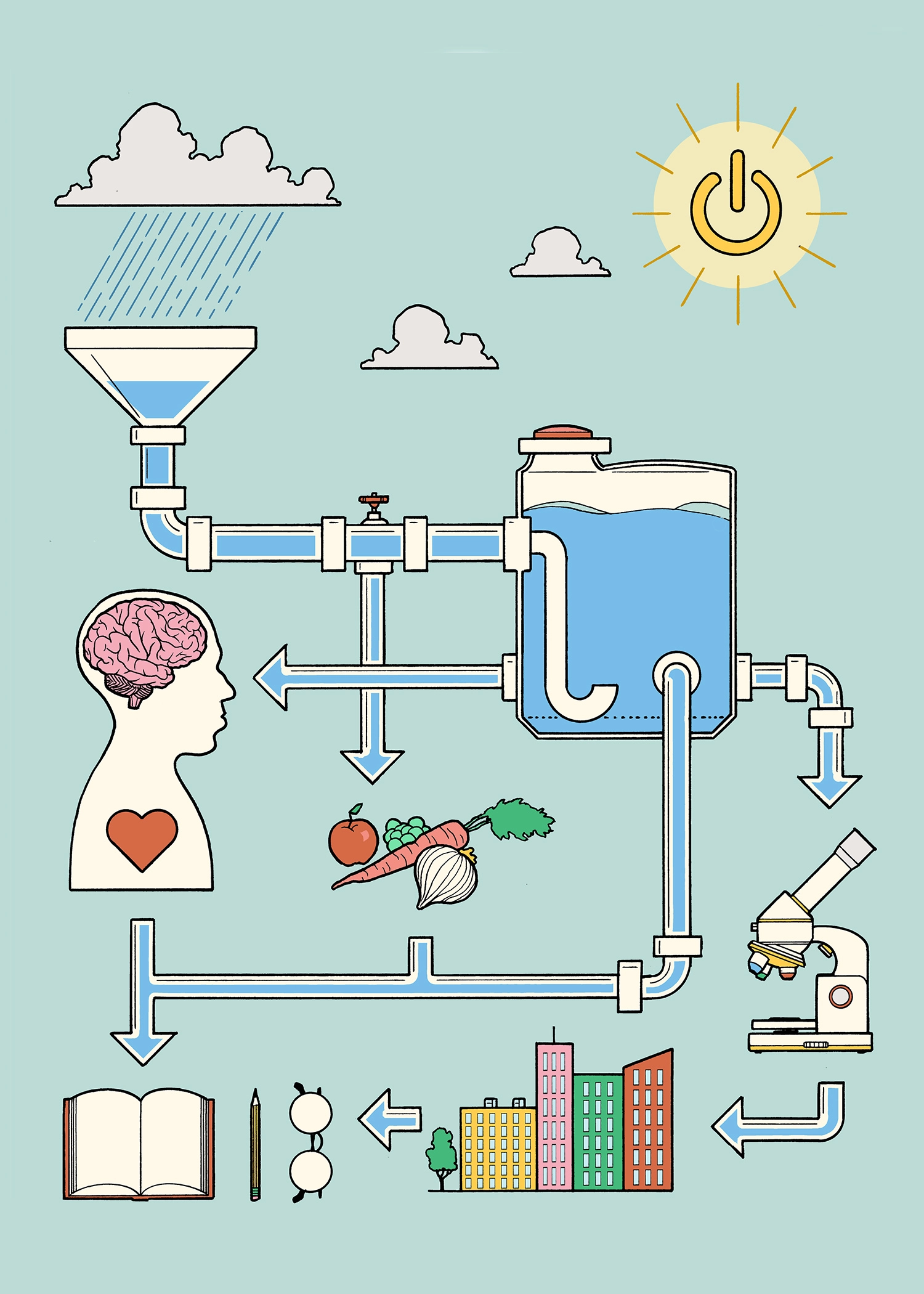

A Blueprint for Global Prosperity

Researchers across U of T are banding together to help the United Nations meet its 17 sustainable development goals

2 Responses to “Alexa, Compose Me a Song”

I seem to recall that Raymond Scott was doing something like this in the 1960s. Give Manhattan Research Inc. a listen. Quite a variety of things are featured in this set, including Scott letting the machines do the composing. I cannot guarantee the results are to all tastes.

I listen to Bach all the time so this was a fairly easy test.