Our Autumn 2022 cover story features Ilya Sutskever, a University of Toronto alum and the chief scientist and co-founder of OpenAI in San Francisco. The company’s flagship product is DALL-E 2, a system that can create original images based on a text description.

Type in “magazine editor in a red hat against a blue background, in art deco style” and, in a few seconds, you get this.

Change the style to art nouveau and almost instantly DALL-E 2 has altered the image to this:

Wouldn’t it be cool, one of our team suggested, to have DALL-E 2 design our cover? What better way to design a cover featuring a story about an AI than by using that very same AI?

In practice, though, it proved difficult. (That said, cover design is rarely easy.)

As with any tool, it takes time to learn how to use DALL-E 2. Three members of our team spent several hours generating dozens of images. But we had difficulty creating exactly what we wanted. Part of the challenge was knowing how to prompt the program to deliver the desired image. For us, this involved a lot of trial and error. (Coming up with a good concept was another big part of the challenge, quite independent of DALL-E 2.)

To get across the idea of an AI that “truly understands us,” for example, we asked DALL-E 2 to show us a robot reading a book – as if it were studying up on humans. It generated several Terminator-like machines, so we asked for a “friendly robot.”

Cute, but nothing suitable for a cover.

Who else truly understands us? A psychiatrist. We played with the idea of a robot psychiatrist treating a human “patient.” Here things got a little weird. More often than not DALL-E 2 made the robot the patient. It had trouble with eyes. And it sometimes didn’t know what should be in the image. A shopping bag?

Combining AI and language quite literally, we prompted DALL-E 2 with: “a lot of tiny words that form the shape of an android’s face.”

We still didn’t have anything close to a viable magazine cover.

“Our prompts weren’t very successful,” observed Vanessa Wyse, the magazine’s creative director and the founder of Studio Wyse. “I couldn’t think of enough descriptors. And then the more description you added, the more complex the image got.”

The studio’s photo editor, Della Rollins, who also works on the magazine, had a similar experience. “I had a hard time controlling it,” she said. “I felt I had a clear vision of what I wanted to see, but the computer couldn’t create it. Maybe I didn’t have the language for it, but it was a little frustrating.”

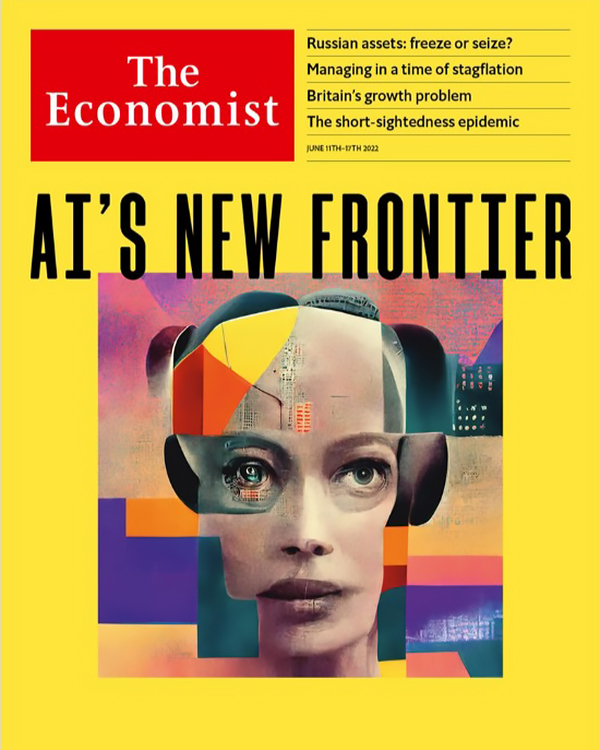

And, as Wyse points out, we arrived a bit late to the DALL-E 2 party. Other magazines, ranging from Cosmopolitan to The Economist, had already used AI to design their covers – “which highlighted just how elementary our attempts were.” (Though I’m not sure the image on The Economist cover is all that advanced.)

Thankfully, DALL-E 2 comes with an editing function that allows you to erase part of the image and put something else in its place. But it seemed a bit glitchy. “It was helpful, but it didn’t always know what to do,” said Wyse. “I tried to [change a picture] to give a koala bear a beer but it couldn’t figure it out.”

And yet, although we ran up against several limitations using DALL-E 2, we were still somewhat awed by its capabilities.

“The idea that you can type in some words, and it spits out a picture (four pictures, in fact) in a certain style in a matter of seconds is extremely impressive,” said Wyse.

Rollins agreed. “It’s like a baby right now, so it’s not understanding everything,” she said. “But it will continue to get smarter and smarter and at the same time the people using it will continue to get smarter about how to use it. In the long term, the capabilities are pretty incredible.”

A photographer, she was fascinated by how you could ask DALL-E 2 to create a photograph specifying a certain lens and a focal point.

One question that came up as we were experimenting: could DALL-E 2 render an image in the style of a particular artist? To find out, Rollins asked it to generate Toronto’s skyline in the style of French artist Malika Favre, who has done a lot of work for The New Yorker.

Pretty good. But is it ethical? Wyse thinks not. “An artist spends their whole career developing a particular look or aesthetic. I don’t think it’s right to ask an AI to replicate it.” (She notes that human illustrators are sometimes asked to do this as well – to mimic another artist’s work: “Also unethical.”)

Could an AI develop its own style? Possibly one day, but the technology isn’t there yet. As Ilya Sutskever notes in our cover story, DALL-E 2 is creative, “but it can’t come up with a whole new aesthetic in the way that a genius like Picasso did.”

Who is likely to use DALL-E 2?

Wyse said she could see DALL-E 2 being used where stock art is common now: by bloggers who need something to accompany a post, in advertising and in corporate reports.

In fact, we used stock art in this issue, with the president’s message. It was a simple illustration, so Wyse gave DALL-E 2 a quick prompt and it produced this.

Getty Images sells its illustration for between $175 and $575, depending on the size you want. DALL-E 2 produced these four for free (there’s no charge to use it at the moment). My guess is that Getty Images and other stock art houses are probably worried right now.

(We also used DALL-E 2 successfully to create several illustrations for this companion piece about using another AI. Without it, we likely would have looked for suitable stock art.)

Rollins also sees potential to use DALL-E 2 for storyboarding in filmmaking. “But as a documentary photographer, I don’t see it replacing people taking pictures of actual events.”

Overall, we agreed that the promise of DALL-E 2 as a tool for artists, designers, and other creative types is immense. Rollins recalled that when the internet first started, there was a lot of hype. When it initially failed to meet (very high) expectations, people were disappointed. But fast forward 25 years and it’s integrated into almost everything we do. As she noted, “This is just the start for AI.”