In an apparent interview with the talk show host Joe Rogan a year ago, Prime Minister Justin Trudeau denies he has ever appeared in blackface, responds to rumours that Fidel Castro was his father, and says he wishes he had dropped a nuclear bomb on protesters in Ottawa.

The interview wasn’t real, of course, and was apparently intended to be humorous. But the AI-generated voice of Trudeau sounded convincing. If the content had been less absurd, it would have been difficult to distinguish from the real thing.

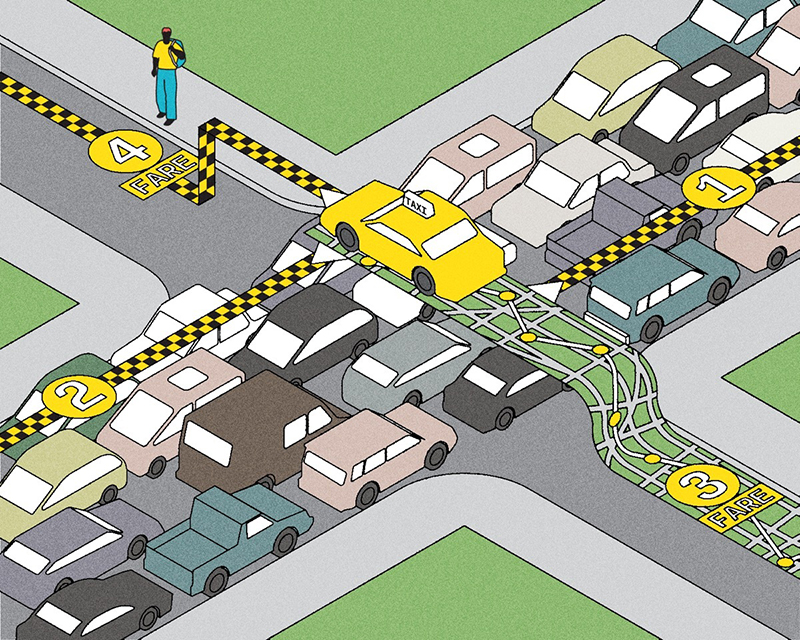

The video highlights the growing danger that artificial intelligence could usher in a new era of disinformation – one that will make it easier than ever for malign actors to spread propaganda and fake news that seems authentic and credible. Recent advances in generative AI have made it much easier to create all sorts of convincing fake content – from written stories to replicated voices and even fake videos. And as the technology gets cheaper and more widespread, the danger increases.

“It’s probably one of the things that I’m most worried about right now,” says Ronald Deibert, director of the Citizen Lab at the Munk School of Global Affairs and Public Policy. “I think it’s going to create all sorts of chaos and havoc, and amplify a lot of the problems that we’re seeing around misinformation and social media,” he says.

AI tools such as ChatGPT allow people to write articles about specific subjects in a particular style. For instance, U.S. researchers were able to get the program to draft convincing essays arguing that the school shooting in Parkland, Florida, was faked, and that COVID-19 could cause heart problems in children. “You can simply plug in a prompt and the entire article can be created. That makes it just so much easier,” Deibert says. “It’s hard to discern if something is fake or authentic.”

Impersonating a voice is also straightforward. The people who made the Trudeau fake interview said they used a service called ElevenLabs. The company’s website offers the ability to generate a realistic human voice from a typed script, and also has the option of “cloning” a voice from a recording.

A technology like this may have been used in January during the New Hampshire presidential primaries, when a robocall in the voice of President Joe Biden urged Democrats not to vote. The New Hampshire Attorney General’s office said the recording seemed to use an artificially generated voice.

Perhaps even more concerning are deepfake videos, which can be made to show a lookalike version of a real person doing or saying almost anything. For instance, a video that appeared last year seemed to depict Hillary Clinton on MSNBC endorsing Ron DeSantis, then a Republican presidential candidate. Although the face seemed slightly rubbery, the video was fairly convincing – until the end, when Clinton says, “Hail, Hydra!” – a reference to an evil organization from Marvel comics and movies.

The stakes can be high. In 2022, a deepfake video of Ukrainian President Volodymyr Zelenskyy appeared to show him urging Ukrainian soldiers to put down their arms and surrender.


In the recent past, creating forged documents, photos or articles took considerable time and effort. Now, generating synthetic media is simple, widely available and cheap. One researcher, whose work is widely known but who has not disclosed his identity, built and demonstrated an AI-enabled platform called Countercloud that was able to create a disinformation campaign – complete with fake news articles and extensive social media support – with only a few prompts. “So what you have is the means to generate authentic, credible-looking content with the push of a button,” Deibert says. This substantially lowers the barriers for malicious actors who want to wreak havoc.

Deibert and his colleagues at the Citizen Lab have documented several sophisticated disinformation campaigns on social media. They recently released a report by researcher Alberto Fittarelli on an effort they call Paperwall, in which at least 123 websites run from within China impersonate legitimate news sites from around the world, running stories favourable to Beijing. Previous work by the lab has uncovered sophisticated disinformation campaigns run on behalf of Russia and Iran.

Deibert isn’t the only one raising the alarm about AI and disinformation. Publications from the New York Times to Foreign Affairs have run articles about the problem – and possible solutions. Among them are technical approaches, such as “watermarks” that allow users to see if information has been generated by an AI, or artificial intelligence programs that are capable of detecting when another AI has created a deepfake. “We will need a repertoire of tools,” Deibert says – “often, the same tools the bad actors are using.”

Social media companies also need to devote more resources to detecting and eliminating disinformation on their platforms. This might require government regulation, he says, though he acknowledges that this comes with the risk of government overreach. He also calls for increased regulation around ethical use of and research into AI, noting this would apply to academic researchers as well.

But Deibert thinks a broader solution is needed, too. A big part of the problem, he says, is social media platforms that depend on provoking extreme emotions in users to keep them engaged. This creates a perfect breeding ground for disinformation. Convincing social media companies to turn the emotional volume down – and educating citizens to be less prone to manipulation – may be the best long-term solution. “We need to rethink the entire digital ecosystem to deal with this problem,” he says.

This article was published as part of our series on AI. For more stories, please visit AI Everywhere.

