A mathematics professor has 5,280 exams to mark. The exam is 16 pages long and divided into three sections – each of which must be graded by a different person. Each exam is stapled together, meaning sections can’t be separated and graded concurrently. A total of 100 volunteers – teachers, graduate students, professors – have agreed to help with the marking.

Question: How long will it take to mark all the papers? Prof. James Colliander did the math on the above scenario. His answer: Too long.

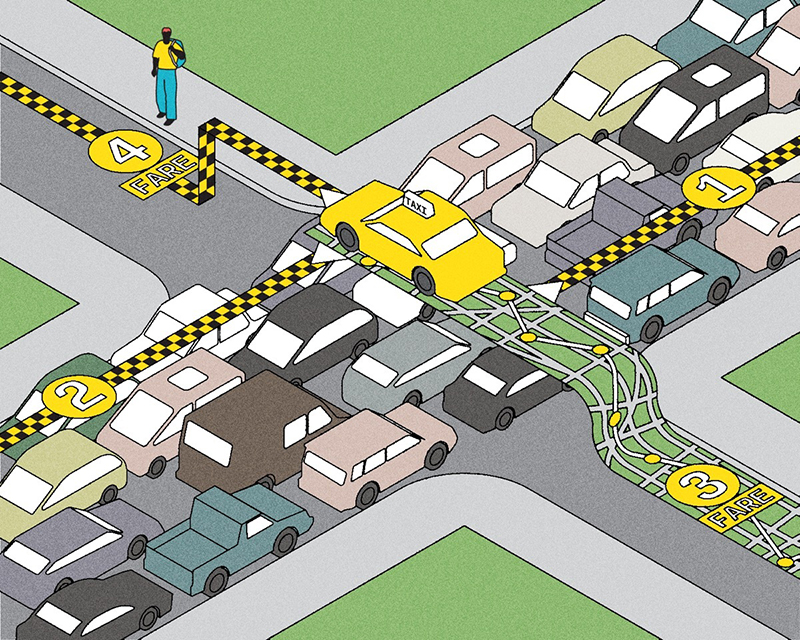

In 2011, U of T partnered with the Canadian Mathematical Society on a student competition that resulted in the marking scenario above. “We had the logistical nightmare of trying to shuffle these papers in front of the right eyeballs. I saw all kinds of inefficiencies – people waiting for their section while other people were marking theirs,” recalls Colliander, a professor of mathematics. “And I realized this problem was not isolated – it was a general problem.”

This experience inspired Colliander to create Crowdmark, a cloud-based software service that makes collaborative marking easier, and leaves more time for teachers to provide thoughtful feedback and commentary.

Here’s how Crowdmark works: Suppose 30 students will be taking a 10-page exam. The teacher scans and uploads the 10 pages. Crowdmark adds a unique Quick Response (QR) code to each page, generating a machine-trackable copy of the exam for every student. The teacher prints the 300 pages of encoded exams, students complete them, and the papers are scanned and re-uploaded to Crowdmark.

The QR codes allow the pages to be sorted and displayed online. The interface makes it simple and fast to mark the same question on all 30 exams, or to mark a single student’s work from start to finish. Several graders can respond to the same student’s work and compare their remarks to find the most effective feedback. Teachers can leave comments for students, parents or other teachers – with privacy settings so that each group sees only the comments meant for it.
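The sorting step can be pictured with a short Python sketch. It is only an illustration of the idea, not Crowdmark's actual implementation: the payload format (`exam-demo/s001/p01`), the `Page` class and the helper names are all hypothetical, and the sketch assumes each page's code identifies one student and one page number.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Page:
    student: int  # which student's booklet (1-based)
    number: int   # page number within the exam (1-based)


def encode(page: Page) -> str:
    """Build the text a page's code might carry (hypothetical format)."""
    return f"exam-demo/s{page.student:03d}/p{page.number:02d}"


def decode(payload: str) -> Page:
    """Recover the student and page number from a scanned payload."""
    _, student, number = payload.split("/")
    return Page(student=int(student[1:]), number=int(number[1:]))


def group_by(pages, key):
    """Group pages by the named attribute, with each group in a stable order."""
    groups = defaultdict(list)
    for page in pages:
        groups[getattr(page, key)].append(page)
    return {
        k: sorted(v, key=lambda p: (p.student, p.number))
        for k, v in sorted(groups.items())
    }


# 30 students x 10 pages, as in the example above.
payloads = [encode(Page(s, n)) for s in range(1, 31) for n in range(1, 11)]

# Scans may arrive in any order; reversing stands in for a shuffled stack.
scanned = [decode(p) for p in reversed(payloads)]

by_page = group_by(scanned, "number")      # same page across every student
by_student = group_by(scanned, "student")  # one student's exam, start to finish

print(len(payloads))       # 300
print(len(by_page[3]))     # 30
print(len(by_student[7]))  # 10
```

Because every page carries its own identifier, the order in which the stack is scanned does not matter: the same 300 pages can be regrouped by page for one marker and by student for another.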

Crowdmark has gone through two major “proof-of-concept” tests. The first grew out of the student competition: in 2011, it took about 700 hours to mark the exams with pen and paper. The next year, markers used Crowdmark to cut that time in half.
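For a rough sense of scale, the figures can be turned into marking time per exam. The calculation below is a back-of-the-envelope sketch that rests on two assumptions not stated in the article: that the 700 hours covered the 5,280 exams in the opening scenario, and that the following year's competition involved a similar number of papers.

```python
EXAMS = 5280               # assumed: the exam count from the opening scenario
PEN_AND_PAPER_HOURS = 700  # approximate figure quoted in the article
CROWDMARK_FRACTION = 0.5   # "by half"


def minutes_per_exam(total_hours: float, exams: int) -> float:
    """Average marking time per exam, in minutes."""
    return total_hours * 60 / exams


crowdmark_hours = PEN_AND_PAPER_HOURS * CROWDMARK_FRACTION

print(round(minutes_per_exam(PEN_AND_PAPER_HOURS, EXAMS), 1))  # 8.0
print(round(minutes_per_exam(crowdmark_hours, EXAMS), 1))      # 4.0
```

Under those assumptions, marking drops from roughly eight minutes per exam to roughly four.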

A second test, at Golf Road Junior Public School in Toronto, yielded similar results. “Teachers were able to navigate the tests much faster than if handling them physically,” says Joseph Romano, the school’s lead information and communications technology teacher. Romano adds that he is already planning to build Crowdmark into the school’s day-to-day practice, even though it won’t be commercially available for another few months.

“I often mention efficiency as the first benefit, but in talking with instructors, I have learned of possibly greater benefits that I didn’t anticipate,” says Colliander. “The archive of feedback, for example, is extremely important. With Crowdmark, a student’s essay and the teacher’s feedback are both stored. So the teacher can prompt the student at exam time, ‘Please look back at the comments on that essay.’”

Colliander plans to start selling the service to individuals and institutions later this academic year. He knows he has work ahead of him to sell Crowdmark, but his confidence is buoyed by the fact that he’s doing what mathematicians do best: solving a problem.

One Response to “An ‘A’ for Teamwork”

Evaluation of tests and efficient response time to students is crucial. Being able to build test marking and space for feedback to elementary students and their families as a day-to-day practice will further support student learning.