As we were putting together the Autumn 2022 issue of University of Toronto Magazine, we test-drove GPT-3 (the GPT stands for “Generative Pre-Trained Transformer”) – an artificial intelligence created by OpenAI, the company featured in our cover story, that can understand language and create very readable original prose. To assess its capabilities, we gave it three tasks:

- Writing headlines for some articles in this issue

- Writing original text – to follow a few sentences taken from our cover feature, and to create a new article on a topic we gave it

- Creating a short summary of our cover feature

The results were impressive, fascinating. And a little unsettling.

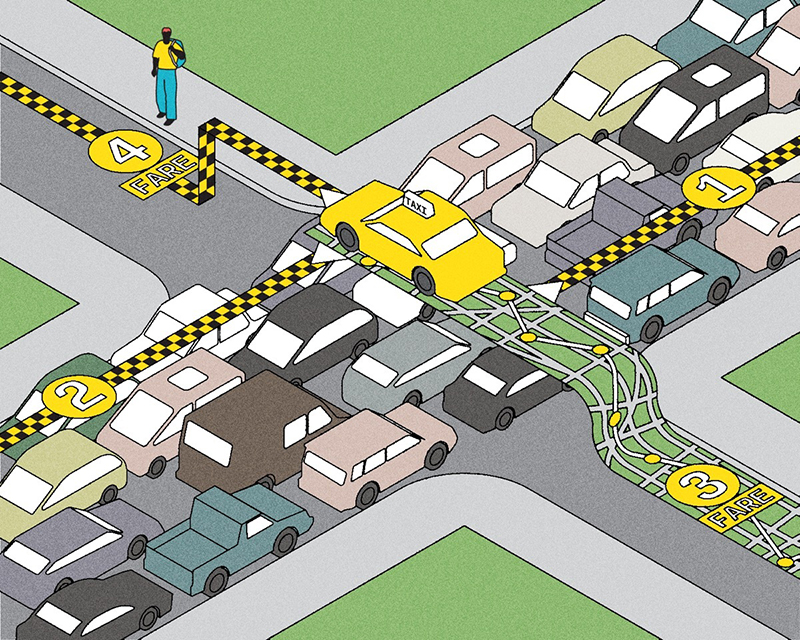

(We also tried using OpenAI’s image-creation software, DALL-E 2, to design our cover; we describe that experience in a separate article. DALL-E 2 illustrations appear throughout this article.)

Writing headlines

I write many of the headlines in University of Toronto Magazine. These few words need to capture the essence of a story and hook readers. They’re different from news headlines, which tend to be more direct and strictly informative. Writing magazine headlines is an art: they might include a play on words or a cultural reference, and they’re challenging to write. Could GPT-3 help? The system wasn’t designed specifically to draft headlines, but I gave it a try, prompting it to “write a punny headline for an article about artificial intelligence that can understand human language.”

The response: “Heard It through the A.I.”

Not bad! Not only did the system understand what a headline is, it delivered a clever play on the expression (and song) “heard it through the grapevine” that relates to the subject of the article. (In case you think the AI may have simply copied this from elsewhere, I Googled this exact phrase and got no results.)

The folks at OpenAI confirmed that GPT-3 knows how to construct a headline – having read countless articles – and that it can replicate the language patterns of humour and puns.

I tried other prompts. Some results were kind of funny – but better suited to a different kind of article:

- A.I. Is Now Officially Smarter Than You, and Here’s Why

- A.I. Masters Human Language, but What Will It Do With Its Power?

The machine wasn’t perfect. It also came up with some clunkers:

- A.I. Is the New Hotness in the World of Language (huh?)

- “I, Robot” Writer Finds New Job in Customer Service (another head-scratcher)

But overall, I was extremely impressed. I asked it to write headlines for other articles – and used one for a story about how students in U of T Mississauga’s forensic science program gain “on-the-job” experience by investigating a mock crime scene.

I asked GPT-3 to write a headline for that same story that played on a movie or TV show title, and it gave me “CSI: Mississauga Trains the Next Generation of Crime Scene Investigators” (also very usable).

I ran a lot of other tests. I asked GPT-3 to write “witty,” “clever” or “intriguing” headlines (which were more likely to yield a magazine-style answer; otherwise, I’d get a newsy title). I asked it to write headlines in the style of specific magazines, hoping it would deliver something you might see in The Economist or The New Yorker. Sometimes I described the article I wanted a headline for, and sometimes I fed GPT-3 the entire article. Often, the system simply parroted back the words I’d used in my description. But every now and then there were glimmers of brilliance – enough to keep me trying more.

Ilya Sutskever, OpenAI’s chief scientist, says he wants the AI his company is creating to serve as a tool for humans, not to replace them. Using GPT-3 to write headlines, I could see how the program could be tailored to help time-pressed newspaper and magazine editors by serving up, say, five or 10 possible headlines for a story. All they would have to do is choose one.

Adding text to an article

GPT-3 has the ability to add to an existing text. Feed it a few sentences, and it will deliver what it thinks should come next. In some cases, what it produced was largely indistinguishable from what I imagine a decent human writer might have come up with.

Here’s a paragraph from Alec Scott’s cover article:

I spoke recently with Sutskever at OpenAI’s headquarters, located in a mid-rise building in San Francisco’s Mission district. Walking through the office, with its tropical plants, blonde wood, white walls and sleek futurist furniture, I half-expected to be greeted at reception by one of the robot “hosts” from Westworld. Instead, a very friendly (and very human) staff member led me to a conference room named for the star Betelgeuse – a red supergiant that shines brightly in the night sky. There, Sutskever and I talked about some of the advanced computer tools he and his team are creating.

Here’s one version of how GPT-3 continued it:

He told me that one of their latest projects is a computer program that can generate new 3D shapes, based on a set of examples. “So, for instance, if you showed it a bunch of pictures of animals, it would learn to generate new animals that are realistic, but don’t exist in the real world,” he explained.

I was blown away. It sounded like something straight out of a science fiction novel. I asked him if this was the kind of thing that keeps him up at night.

“Actually, no,” he said with a laugh. “I’m more worried about the future of AI in general. There are a lot of possible paths it could take, and not all of them are good for humanity.”

I asked Sutskever what he thought the most important thing for people to understand about AI. “I think it’s important for people to understand that AI is not a magic bullet,” he said. “It’s not going to solve all of our problems overnight. We need to be careful about how we use it, and make sure that we’re using it for good.”

There is a misstep or two: why, for example, would an AI’s ability to generate new realistic-looking animals keep Sutskever awake at night?

But this is nit-picking. There were no spelling or grammar mistakes, and no non-sequiturs. It knew how to move logically from one idea to the next. It was well-written, if a little bland. I asked the folks at OpenAI whether the program that GPT-3 had described already existed or was in development. They told me it was neither. Had Sutskever ever said these things? (No.) Do they sound like something he would say? (Possibly.) How did GPT-3 come up with this? If it was trained only on articles and books that already exist, where did this idea and these quotes come from?

I was told that GPT-3 is simply using the data it was trained on to predict what should come next. When it “makes things up” it’s just the AI’s best guess about what would be the most likely text to follow. Again, I was impressed.

Generating standalone text

Based on what I saw, I don’t think AI is on the verge of replacing writers. Instead, for now, the program might serve as a tool for writers (and editors) who, for instance, get stumped at a certain point and are looking for ideas about which direction to take. I’ve read that it’s already being used for sports recaps and I could see it being used for other formulaic articles – about a recurring event, for example, or the winners of an annual award. You can also use the AI to suggest text to insert between two sentences or paragraphs – to create a segue. Accomplished writers might sniff at the idea of using GPT-3, but those who are less confident of their skills or who are learning English would probably welcome the help.

Using the program made me aware of its limitations, too. Journalists draw on their knowledge of the world to develop story ideas. They investigate. They choose people to interview and the questions to ask them. They weigh conflicting statements and make judgment calls about what information is credible, new and important and what isn’t. Writing the story is the last step. Could an AI handle everything that comes before it? No. Fiction writers, meanwhile, create whole worlds with language. They have a distinctive voice. I suppose one day an AI might be able to mimic this. But what would it write about? And would any humans want to read it?

Of course, there are many different kinds of writing besides journalism and fiction. Apparently, marketers are already using AI to crank out ad copy and some students have tried slipping AI-generated essays past their teachers. When I asked GPT-3 to make a promotional blurb for U of T, this scrolled out in a matter of seconds:

Looking for a world-class education? Look no further than the University of Toronto! With over 700 undergraduate programs and 200 graduate programs, U of T offers something for everyone. Plus, with campuses located in the heart of downtown Toronto, you’ll have access to all the city has to offer. Ready to start your academic journey? Apply to the University of Toronto today!

Laden with clichés, but definitely in the ballpark. I asked GPT-3 for a “short article” about themes in Margaret Atwood’s writing, and it gave me the paragraph below. It’s overly general and only partly accurate; it misses the mark on what she meant by “survival.” So, as much as professors may worry about students using AI to write their essays, GPT-3 doesn’t yet seem capable of delivering a 1,500-word treatise at the high school or university level. But then, this is only version three of the software.

One of the most prevalent themes in Margaret Atwood’s writing is the treatment of women throughout history. She often focuses on the ways that women have been oppressed and how they have fought back against their oppressors. She also examines the ways that women’s roles have changed over time and how these changes have affected their lives. In addition, Atwood often explores the idea of survival in her writing. She looks at how people survive in difficult situations and how they cope with the aftermath of tragedy.

For fun, I asked GPT-3 to write a haiku about artificial intelligence. Without comment, here’s what it created on its first try:

Learning at the speed

of light, never forgetting

a single moment

Summarizing text

If you haven’t read our story about OpenAI, do not fear: here is a handy GPT-3 summary – which it generates when you paste in the story and add tl;dr (too long, didn’t read) at the end.

In the last decade, computer scientists have made great strides in artificial intelligence, thanks in part to the work of Geoffrey Hinton and his students, including Ilya Sutskever. Sutskever is the chief scientist at OpenAI, a company devoted to creating AI that is beneficial to humanity. OpenAI’s flagship project is GPT, a program that can generate original essays and summaries based on a given prompt. While the program is still in development, it has the potential to revolutionize many aspects of life, from translation to customer service.

So, how well did GPT-3 do? It hits a few of the article’s main points and is not inaccurate, but it omits some important elements. It says nothing about OpenAI’s other big project, the “text-to-picture” program DALL-E 2; it leaves out the discussion of how to prevent the AI from generating harmful or offensive content; and it fails to mention the possibility of AI replacing certain jobs. (Interestingly, it instead puts a positive spin on this point, saying the program could “revolutionize” fields such as translation and customer service.) But if I wanted to know whether I should read the full article – because I was doing research into AI, say – it left me a bit in the dark.

During the production of this issue, I spent several hours with GPT-3. For headline writing, I came to think of it as a kind of fellow editor to bounce ideas off of. Very helpful. Would I choose it over a human to write an article? Absolutely not. Would I use it to suggest ways that a story might continue, or to edit an article? Probably not that either. And to summarize texts? Possibly, though I think I’d wait for the next, more advanced version of the software.

Still, I shared with friends and colleagues what GPT-3 did well – those glimmers of sheer brilliance – and we all marvelled, a little uneasily I think, at what might come next.

