It’s no secret that artificial intelligence has unleashed a variety of potential dangers. AI systems, for example, can be used to spread misinformation; they can perpetuate biases that are inherent in the data they have been trained on; and autonomous AI-empowered weapons may become commonplace on 21st-century battlefields.

These risks are, to a large extent, ones that we can see coming. But Roger Grosse, an associate professor of computer science at U of T, is also concerned about new kinds of risks that we might not perceive until they arrive. These risks increase, Grosse says, as we get closer to achieving what computer scientists call artificial general intelligence (AGI) – systems that can perform a multitude of tasks, including ones they were never explicitly trained to do. “What’s new about AGI systems is that we have to worry about the risk of misuse in areas they weren’t specifically designed for,” says Grosse, who is a founding member of the Vector Institute for Artificial Intelligence and an affiliate of U of T’s Schwartz Reisman Institute for Technology and Society.

Grosse points to large language models, powered by deep-learning networks, as an example. These models, which include the popular ChatGPT, aren’t programmed to produce any particular output; rather, they analyze massive volumes of text (and images and videos), and respond to prompts by stringing together words one at a time, choosing each word based on how likely it is to come next given the data they were trained on. While this may seem like a haphazard way of building sentences, systems such as ChatGPT have nonetheless impressed users by writing essays and poems, analyzing images, writing computer code and more.
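To make the "next word" idea concrete, here is a toy sketch in Python. It is not how ChatGPT is built: real systems use deep neural networks trained on vast datasets, whereas this example simply counts which word follows which in a single made-up sentence. The corpus and the function names are invented for illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word_probabilities(counts, word):
    """Turn the raw follow-up counts for `word` into probabilities."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}
    return {w: c / total for w, c in followers.items()}


def generate(counts, start, max_words=8, seed=0):
    """Build a sentence one word at a time, sampling each next word
    in proportion to how often it followed the previous word."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(max_words - 1):
        probs = next_word_probabilities(counts, output[-1])
        if not probs:  # the last word was never followed by anything
            break
        words, weights = zip(*probs.items())
        output.append(rng.choices(words, weights=weights, k=1)[0])
    return " ".join(output)


# Hypothetical one-sentence "training set".
corpus = "the robot fetched the coffee and the robot opened the door"
counts = train_bigram_counts(corpus)

print(next_word_probabilities(counts, "the"))
print(generate(counts, "the"))
```

In this tiny corpus, "the" is followed by "robot" twice and by "coffee" and "door" once each, so the model picks "robot" half the time. Nothing in the code says what sentence to produce; the output depends entirely on the patterns in the text it was given.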

And they can catch us by surprise: Last year, Microsoft’s Bing chatbot, powered by ChatGPT, told journalist Jacob Roach that it wanted to be human, and was afraid of being shut down. For Grosse, the challenge is trying to determine what sparked that output. To be clear, he doesn’t think the chatbot was actually conscious, or actually expressing fear. Rather, it may have come across something in its training data that led it to say what it said. But what was that something?

To tackle this problem, Grosse has been working on techniques involving “influence functions,” which are designed to deduce what aspects of an AI system’s training data led to a particular output. For example, if the training data included popular sci-fi stories, where tales of conscious machines are ubiquitous, then this could easily lead an AI to make statements similar to those found in such stories.
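The article does not describe Grosse’s methods in detail, so the following is only a rough sketch of the general idea, using a common textbook formulation of influence functions on a deliberately tiny model: a straight line through the origin fitted to four made-up data points. Each score estimates how much giving one training example slightly more weight would raise or lower the model’s error on a chosen test example. Applying the idea to a large language model requires heavy approximation; the data, the model and the function names here are all assumptions made for illustration.

```python
def fit_slope(xs, ys):
    """Least-squares fit of y = w * x (a one-parameter model)."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


def loss_gradient(w, x, y):
    """Derivative with respect to w of the loss 0.5 * (w * x - y) ** 2."""
    return (w * x - y) * x


def influence_scores(xs, ys, x_test, y_test):
    """Estimate each training point's influence on the test loss.

    score_i = -grad_test * (1 / hessian) * grad_i
    A positive score means that upweighting point i would increase
    the test loss; a negative score means it would decrease it.
    """
    w = fit_slope(xs, ys)
    # Second derivative of the average training loss; for this
    # one-parameter model it is a single number, not a matrix.
    hessian = sum(x * x for x in xs) / len(xs)
    grad_test = loss_gradient(w, x_test, y_test)
    return [
        -grad_test * loss_gradient(w, x, y) / hessian
        for x, y in zip(xs, ys)
    ]


# Hypothetical training data: three points on the line y = x,
# plus one outlier at (4, 8).
train_x = [1.0, 2.0, 3.0, 4.0]
train_y = [1.0, 2.0, 3.0, 8.0]

scores = influence_scores(train_x, train_y, x_test=2.0, y_test=2.0)
for x, y, score in zip(train_x, train_y, scores):
    print(f"training point ({x}, {y}): influence {score:+.3f}")
```

Here the outlier gets the largest score in magnitude, and a positive one, flagging it as the training example most responsible for the model’s error on the test point. That is the kind of question the technique is meant to answer: which pieces of training data pushed the system toward a particular output.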

He notes that an AI system’s output may not necessarily be copied word-for-word from the training data, but rather may be some variation on what it has encountered. The output can be “thematically similar,” Grosse says, which suggests that the AI is “emulating” what it has read or seen and performing “a higher level of abstraction.” It would be a different matter, however, if the AI model developed an underlying motivation. “If there were some aspect of the training procedure that is rewarding the system for self-preservation behaviour, and this is leading to a survival instinct, that would be much more concerning,” says Grosse.

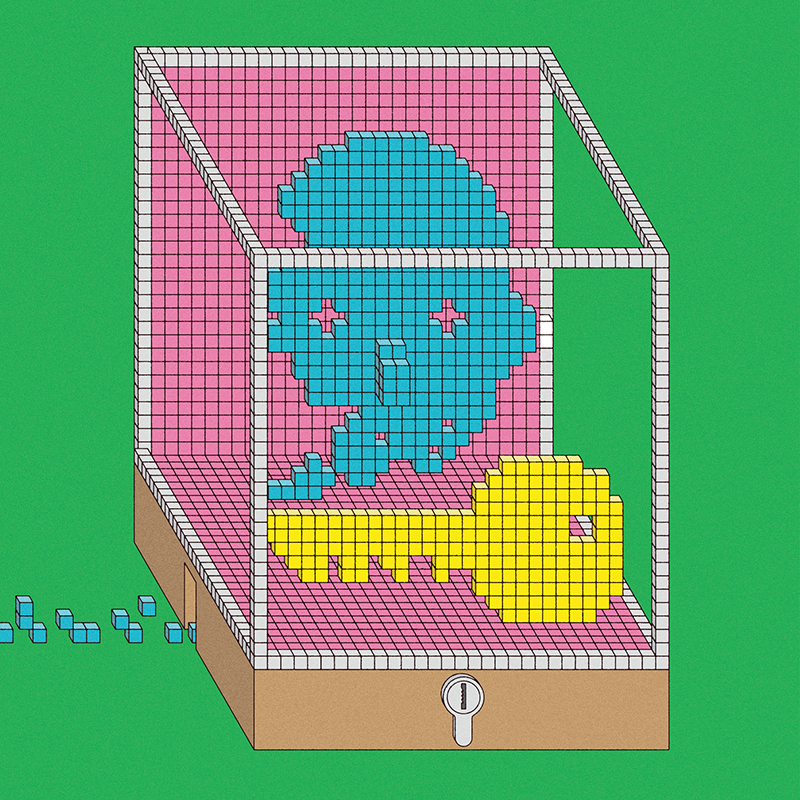

Even if today’s AI systems aren’t conscious – there’s “nobody home,” so to speak – Grosse believes there could be situations where it makes sense to describe an AI model as having “goals.” Artificial intelligence can surprise us by “behaving as if it had a goal, even though it wasn’t programmed in,” he says.

These secondary or “emergent” goals crop up in both human and machine behaviour, says Sheila McIlraith, a professor in the department of computer science and associate director and research lead at the Schwartz Reisman Institute. For example, a person who has the goal of going to their office will develop the goal of opening their office door, even though it wasn’t explicitly on their to-do list.

The same goes for AI. McIlraith cites an example used by computer scientist Stuart Russell: If you tell an AI-enabled robot to fetch a cup of coffee, it may develop new goals along the way. “There are a bunch of things it needs to do in order to get that cup of coffee for me,” she explains. “And if I don’t tell it anything else, then it’s going to try to optimize, to the best of its ability, in order to achieve that goal. And in doing that, it will set out other goals, including getting to the front of the line of the coffee store as quickly as possible, potentially hurting other people because it was not told otherwise.”

Once the AI model is developing and acting on goals beyond its original instructions, the so-called “alignment” issue becomes paramount. “We’d like to make sure the goals of AI are in the interest of humanity,” says Grosse. He adds that it makes sense to say that an AI model can reason if it works through a problem step by step, the way a human would. That AI can seemingly solve difficult problems would have seemed miraculous not long ago. “That’s why we’re in a different situation from where we were a few years ago,” says Grosse, “because if you asked me in 2019, I would have said deep learning can do a lot of amazing things, but it can’t reason.”

For Grosse, the acceleration of AI technology, as illustrated by the capabilities of today’s large language models, is cause for concern – and a reason to refocus his research on safety. “I’d been following the discussions of AI risk for a long time,” he says. “I’d sort of bought into the arguments that if we had very powerful AI systems, it would probably end badly for us. But I thought that was far away. In the last few years, things have been moving much faster.”

Even if the frightening scenarios depicted in the Terminator movie franchise are more Hollywood than reality, Grosse believes it makes sense to prepare for a world in which AI systems come ever-closer to possessing human-level intelligence, and have some measure of autonomy. “We need to worry about the problems that are coming,” says Grosse, “where more powerful systems could actually pose catastrophic risk.”

This article was published as part of our series on AI. For more stories, please visit AI Everywhere.

Watch the second episode of What Now: AI, about the safety and governance of artificial intelligence, featuring computer science professor Roger Grosse and Gillian Hadfield, a professor of law and the inaugural Schwartz Reisman Chair in Technology and Society.

No Responses to “Safety First”

What if sentience is just higher levels of machine learning algorithms layered on top of higher level algorithms? At what level of intelligence are humans fully sentient? At what age, for example, do children learn about sarcasm? Can you argue that humans are not fully sentient until then? From the literature, it appears that AI agents can already pick out sentences that don't fit into a longer article.