Artificial intelligence already seems ubiquitous. In one version of the future, this bodes well: AI will turbocharge progress, lead to new ways to treat disease, warn us of public health threats and even generate new career possibilities. In a darker scenario, it could eliminate entire job categories and fuel a tidal wave of disinformation.

Ensuring the safe and responsible development of AI will be crucial. Read on as we explore how U of T researchers at the Schwartz Reisman Institute for Technology and Society, the Vector Institute and across the three campuses are harnessing the power of AI for good – and helping to shape its future.

Healing Power

About eight years ago, artificial intelligence seemed poised to revolutionize health care. IBM’s much-hyped AI system, known as Watson, had rapidly morphed from winning game-show contestant to medical genius, able to provide diagnoses and treatment plans with lightning speed. Around the same time, Geoffrey Hinton, a U of T professor emeritus, famously declared that human radiologists were on their way out.

Now, it’s 2024: radiologists are still with us, and Watson Health is not. Have AI and medicine parted company? Quite the opposite, in fact: today, the marriage of disciplines is more vibrant than ever.

Interspersed among these stories, you’ll find definitions for words that have taken on new meanings in the age of AI. This glossary was provided by Toryn Klassen (postdoctoral fellow) and Lev McKinney (master’s student), of U of T computer science.

Glossary: Sycophancy

Glossary: Sycophancy

Language models such as ChatGPT are trained, in part, to produce responses that their users approve of. This can lead to sycophancy, where the AI gives answers that seem to match the user’s beliefs, regardless of the truth. For example, the AI might respond differently depending on whether it thinks you’re a liberal or a conservative.

AI Learns Everything It Knows from Humans. Will Humans Also Learn from AI?

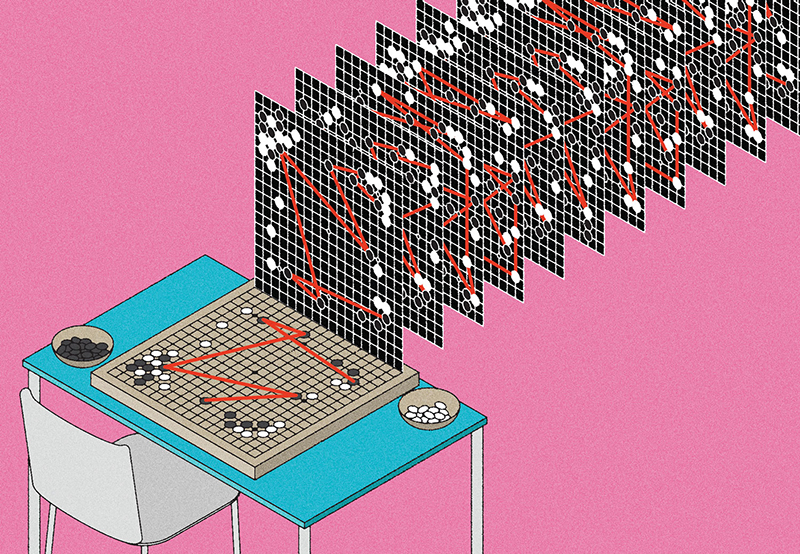

In 2016, an AI program called AlphaGo made headlines by defeating one of the world’s top Go players, Lee Sedol, winning four games of a five-game match. AlphaGo learned the strategy board game by studying the techniques of human players, and by playing against versions of itself. While AI systems have long been learning from humans, scientists are now asking if the learning could go both ways. Can we learn from AI?

Glossary: Prompt

Glossary: Prompt

The input given, often by a human user, to an AI model to generate a response. The prompt for a language model such as ChatGPT, or a text-to-image model such as DALL-E, could be a sentence, question, command or other text. The prompt generally defines a task and implicitly provides context for what parts of the model’s training data are relevant to fulfilling it.

The Age of Deception

In an apparent interview with the talk show host Joe Rogan a year ago, Prime Minister Justin Trudeau denies he has ever appeared in blackface, responds to rumours that Fidel Castro was his father, and says he wishes he had dropped a nuclear bomb on protesters in Ottawa.

The interview wasn’t real, of course, and was apparently intended to be humorous. But the AI-generated voice of Trudeau sounded convincing. If the content had been less absurd, it would have been difficult to distinguish from the real thing.

Glossary: Alignment

Glossary: Alignment

An AI system is considered aligned with a person or group when it applies its capabilities toward achieving the intended goals of that person or group. With language models, alignment is sometimes used in a narrower sense to mean that the models follow user instructions but won’t output harmful content such as hate speech or advice on how to commit crimes.

Safety First

It’s no secret that artificial intelligence has unleashed a variety of potential dangers. AI systems, for example, can be used to spread misinformation; they can perpetuate biases that are inherent in the data they have been trained on; and autonomous AI-empowered weapons may become commonplace on 21st-century battlefields.

These risks are, to a large extent, ones that we can see coming. But Roger Grosse, an associate professor of computer science at U of T, is also concerned about new kinds of risks that we might not perceive until they arrive.

Glossary: Guardrail

Glossary: Guardrail

A guideline, constraint or safety measure placed on an AI model to make sure it operates within predefined boundaries and doesn’t behave in unwanted ways, such as producing harmful content or exhibiting bias or discrimination.

Tuning into Tomorrow

About a year ago, Stephen Brade started noodling around with a guitar composition. He set the unfinished piece aside, but returned to it when he realized that an AI-powered synthesizer he was developing might help him find the swelling yet spacious sound he was striving for.

Brade, a master’s student in the computer science department at U of T, is the creator of SynthScribe, a research project that aims to make synthesizers more user-friendly by allowing musicians to shape sounds through text and audio inputs rather than complex manual adjustments.

Glossary: Jailbreak

Glossary: Jailbreak

Companies put a lot of effort into keeping their language models from saying the f-word, producing hate speech or telling you how to build dangerous weapons. But there are ways to design prompts that “trick” the model into outputting precisely the content that its designers had tried (by using guardrails) to prevent. These are called jailbreaks.

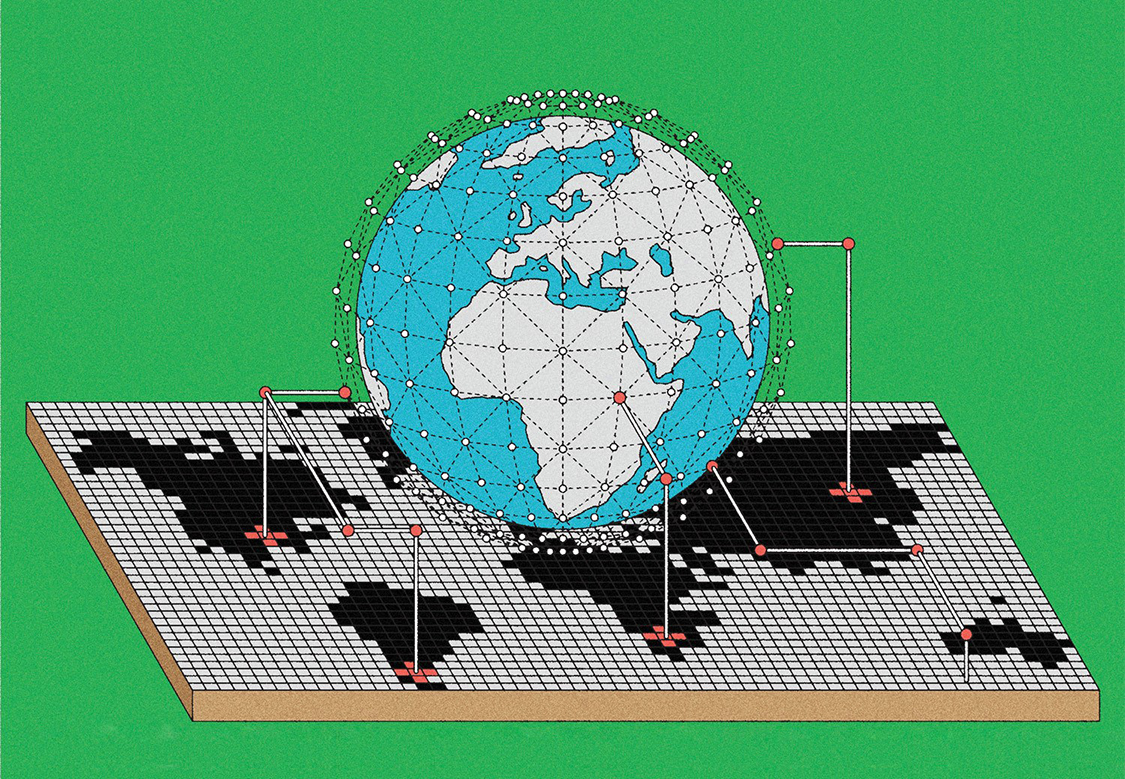

A Sentinel for Global Health

In late 2019, a company called BlueDot warned its customers about an outbreak of a new kind of pneumonia in Wuhan, China. It wasn’t until a week later that the World Health Organization issued a public warning about the disease that would later become known as COVID-19.

The scoop not only earned BlueDot a lot of attention, including an interview on 60 Minutes, but also highlighted how artificial intelligence could help track and predict disease outbreaks.

Glossary: Token

Glossary: Token

A short sequence of characters, such as letters, punctuation marks and spaces, that serves as the fundamental building block for analyzing textual data within AI systems. For ChatGPT, 1,000 tokens is about 750 words. Users are sometimes billed based on how many tokens appear in the inputs they give to, and the outputs they receive from, AI language models.
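To make the arithmetic concrete, here is a rough sketch in Python. It assumes only the rule of thumb above (about 750 words per 1,000 tokens) and a hypothetical per-token price; real tokenizers split text into sub-word pieces, so actual counts will vary from these estimates.

```python
# Rough token and cost estimates based on the rule of thumb that
# 1,000 tokens correspond to about 750 words. Real tokenizers split
# text into sub-word pieces, so actual counts will differ.
WORDS_PER_1000_TOKENS = 750


def estimate_tokens(text: str) -> int:
    """Estimate the token count of `text` from its word count."""
    words = len(text.split())
    return round(words * 1000 / WORDS_PER_1000_TOKENS)


def estimate_cost(prompt: str, reply: str, price_per_1000_tokens: float) -> float:
    """Estimate the bill for one exchange, counting input and output tokens.

    `price_per_1000_tokens` is a hypothetical rate, not any provider's actual price.
    """
    total_tokens = estimate_tokens(prompt) + estimate_tokens(reply)
    return total_tokens / 1000 * price_per_1000_tokens


if __name__ == "__main__":
    question = "word " * 300  # stand-in for a 300-word prompt
    answer = "word " * 450    # stand-in for a 450-word response
    print(estimate_tokens(question) + estimate_tokens(answer))  # 1000
```

Under this rule of thumb, a 300-word question and a 450-word answer together come to roughly 1,000 tokens, and both sides of the exchange count toward the bill.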

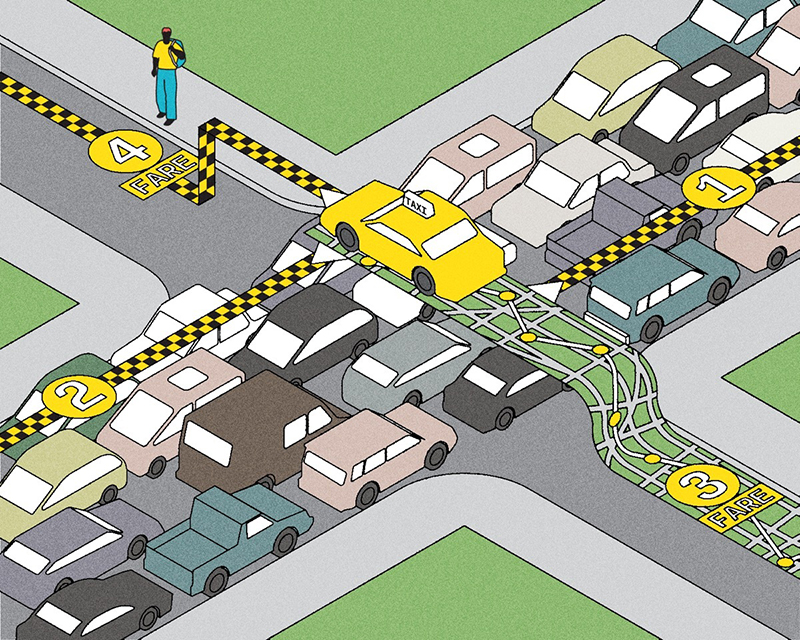

People Worry That AI Will Replace Workers. But It Could Make Some More Productive

It’s a common fear: powerful new AI tools will replace a large part of the labour force (making many people poorer), while enriching the companies that make and deploy AI. But could a different future unfold? Could AI, in fact, help reduce income inequality? Three U of T scholars argue that it could.